Introduction

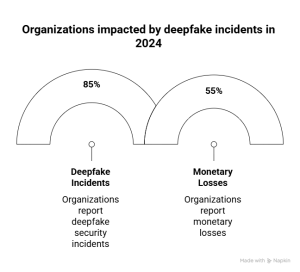

This week, new industry data confirms what security teams have feared: deepfake-enabled fraud is accelerating, and many organizations remain underprepared. According to the latest AI Deepfake Threat Report, 85% of mid-to-large organizations experienced at least one deepfake incident in the past year, and over half of those affected suffered financial losses, averaging more than $280,000 (IT Pro).

For CISOs and security leaders, this is a red alert. These attacks are no longer hypothetical experiments; they are striking real business workflows: video calls, chat, shared files, email threads, and vendor interactions. To stay protected, security must shift from defending a “network perimeter” to securing the entire workspace.

Below I lay out:

- Verified trends and data in deepfake fraud

- How these risks map into workspace security

- What a workspace-first defense must do

- Action steps for CISOs today

1. Verified Trends & Data in Deepfake Fraud

Deepfake Incidents & Costs

- The 2025 AI Deepfake Threat Report reveals 85% of organizations reported at least one deepfake incident in the prior year (IT Pro).

- Of those impacted, 55% reported monetary losses, with an average loss over $280,000 (IRONSCALES).

- Losses are far from trivial – some organizations lost $500,000 or more, and a smaller subset lost over $1 million (IRONSCALES).

- While 94% of IT/security leaders indicate concern about deepfakes, only a minority are confident in their defenses (IRONSCALES, Security Boulevard).

Detection Gaps & Confidence Illusions

- In simulated detection exercises, organizations’ average detection accuracy is only about 44%, and only about 8% score above 80% on the first try (Security Boulevard, IT Pro).

- Although more organizations now offer deepfake awareness training (88% do), the gap between awareness and actual detection capability remains large (IT Pro, Security Boulevard).

- According to Dark Reading, 71% of organizations plan to prioritize deepfake defense in the next 12-18 months, yet many have made little or no investment today (Dark Reading).

Survey Corroboration

- A Gartner/Infosecurity report (via KnowBe4) found 62% of organizations were targeted by deepfake attacks in the past year (KnowBe4 Blog).

- In manufacturing sectors specifically, fewer than one in three executives feel prepared for deepfake or AI-powered threats (Industrial Cyber).

All this data points to a looming mismatch: attackers are embracing synthetic media techniques quickly, while defenses lag.

2. Why Deepfake Risk Is a Workspace Problem

Deepfakes don’t just break email – they break trust inside business workflows. Here’s how workspace risk plays into this.

Video / Real-Time Calls

An attacker can synthesize a face or voice in real time during a meeting call, impersonating a known executive. The target believes the call is legitimate and may comply with sensitive requests (financial transfers, credential sharing, system changes).

Chat / Messaging

Impersonation via chat is especially dangerous because it’s fast, familiar, and often trusted. Deepfake avatars or voice notes may amplify believability.

Email + Multi-Channel Attacks

Deepfakes can complement BEC (Business Email Compromise) attacks: e.g. a phony video message instructing an employee to carry out a wire transfer that was requested via email.

Collaboration & Shared Files

Once inside collaboration spaces, attackers may establish footholds, drop malicious documents, or manipulate shared workflows under the guise of legitimate identities (vendors, partners, executives).

Vendor / Supply Chain Entry

A trusted vendor or third party is often the bridge into an organization. Deepfake impersonation of vendor reps (via calls, emails, chats) can bypass trust-based access and lead to infiltration inside the workspace ecosystem.

In short: the attack surface is no longer just “outside → in.” It’s “through the workspace channels themselves.”

3. What a Workspace-First Defense Must Do

To defend against deepfake fraud embedded in workflows, a modern defense must evolve in these directions:

A. Multi-Modal Deepfake Detection

- Visual / video-level artifact detection (pixel inconsistencies, frame manipulation)

- Audio / voice anomaly analysis (tone, cadence, identity signatures)

- Behavioral / contextual cues (unexpected requests, out-of-sequence instructions)

Combining these modes is critical – deepfakes are subtle and can camouflage in a single channel.
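
To make the idea of combining modes concrete, here is a minimal sketch of score fusion. It assumes three hypothetical detectors that each return a suspicion score between 0 and 1; the weights and thresholds are illustrative placeholders, not calibrated values from any product or report.

```python
from dataclasses import dataclass


@dataclass
class ModalityScores:
    """Suspicion scores in [0, 1] from three hypothetical detectors."""
    visual: float      # frame / pixel artifact detector
    audio: float       # voice anomaly detector
    behavioral: float  # unusual-request classifier


# Illustrative weights and thresholds (assumptions, not tuned values).
WEIGHTS = {"visual": 0.4, "audio": 0.3, "behavioral": 0.3}
FUSED_THRESHOLD = 0.5
SINGLE_CHANNEL_THRESHOLD = 0.9


def fuse(scores: ModalityScores) -> float:
    """Weighted average of the three modality scores."""
    return (WEIGHTS["visual"] * scores.visual
            + WEIGHTS["audio"] * scores.audio
            + WEIGHTS["behavioral"] * scores.behavioral)


def is_suspicious(scores: ModalityScores) -> bool:
    """Flag when the fused score is high or any single channel is near-certain."""
    strongest = max(scores.visual, scores.audio, scores.behavioral)
    return fuse(scores) >= FUSED_THRESHOLD or strongest >= SINGLE_CHANNEL_THRESHOLD


# Moderate signals in every channel add up to a flag,
# even though no single detector is confident on its own.
print(is_suspicious(ModalityScores(visual=0.6, audio=0.55, behavioral=0.5)))
```

The design point is that a call can be flagged when several weak signals agree, which a single-channel detector with a high threshold would miss.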

B. Cross-Channel Correlation & Investigation

- Link alerts across email, chat, video, file sharing, CRM, and vendor logs

- Build an “attack narrative” – for example, piecing together a fake video call with suspicious invoice changes or chat instructions

- Automate threat enrichment (context, history, identity verification) to reduce noise and prioritize high-fidelity alerts
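
As a rough illustration of cross-channel correlation, the sketch below groups alerts by the identity they claim to come from and keeps only groups that touch more than one channel within a time window. The `Alert` fields, the 24-hour window, and the two-channel minimum are all assumptions chosen for the example.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Alert:
    channel: str         # "email", "chat", "video", ...
    identity: str        # person or vendor the activity claims to come from
    timestamp: datetime
    summary: str


def build_narratives(alerts, window=timedelta(hours=24), min_channels=2):
    """Return (identity, alerts) groups that span several channels within the window."""
    by_identity = {}
    for alert in sorted(alerts, key=lambda a: a.timestamp):
        by_identity.setdefault(alert.identity, []).append(alert)

    def spans_enough(cluster):
        return len({a.channel for a in cluster}) >= min_channels

    narratives = []
    for identity, items in by_identity.items():
        cluster = []
        for alert in items:
            # Close the cluster once an alert falls outside the window
            # measured from the cluster's first alert.
            if cluster and alert.timestamp - cluster[0].timestamp > window:
                if spans_enough(cluster):
                    narratives.append((identity, cluster))
                cluster = []
            cluster.append(alert)
        if spans_enough(cluster):
            narratives.append((identity, cluster))
    return narratives


t0 = datetime(2025, 1, 1, 9, 0)
sample = [
    Alert("video", "cfo", t0, "unscheduled call requesting payment"),
    Alert("email", "cfo", t0 + timedelta(hours=3), "invoice bank details changed"),
    Alert("chat", "vendor-x", t0 + timedelta(hours=1), "unusual file share"),
]
for identity, cluster in build_narratives(sample):
    print(identity, [a.channel for a in cluster])
```

In this sample only the "cfo" alerts form a narrative, because they cross two channels within the window; the lone vendor alert stays a low-priority, single-channel event.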

C. Vendor / Supply Chain Monitoring

- Monitor third-party interactions across channels

- Flag anomalous vendor behavior (new access patterns, deviations in communication style)

- Enforce just-in-time, least-privilege access and verification for external actors
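
One simple way to flag anomalous vendor behavior is to compare each interaction against a baseline of what has been seen before. The sketch below is a deliberately small example: the `VendorBaseline` fields (channels, sender domains, bank accounts) are hypothetical, and a real deployment would learn them from historical logs.

```python
from dataclasses import dataclass, field


@dataclass
class VendorBaseline:
    """What 'normal' looks like for one vendor (hypothetical fields)."""
    channels: set = field(default_factory=set)
    sender_domains: set = field(default_factory=set)
    bank_accounts: set = field(default_factory=set)


def vendor_anomalies(baseline, channel, sender_domain, bank_account=None):
    """Return the reasons this interaction deviates from the vendor's baseline."""
    reasons = []
    if channel not in baseline.channels:
        reasons.append(f"unfamiliar channel: {channel}")
    if sender_domain not in baseline.sender_domains:
        reasons.append(f"unfamiliar sender domain: {sender_domain}")
    if bank_account is not None and bank_account not in baseline.bank_accounts:
        reasons.append("payment details differ from those on file")
    return reasons


baseline = VendorBaseline(
    channels={"email"},
    sender_domains={"acme.example"},
    bank_accounts={"ACCT-001"},
)
print(vendor_anomalies(baseline, "chat", "acme.example", "ACCT-999"))
```

An empty list means the interaction matches the baseline; any returned reason is a candidate for the verification step before access or payment is granted.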

D. Layered Controls Beyond Training

- Deepfake-aware training is necessary but insufficient on its own

- Use checkpoint verification (e.g. out-of-band confirmation, video authentication) for high-risk requests

- Enforce policies that prevent unilateral changes without escalation
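
The checkpoint idea can be expressed as a small policy gate. This is a sketch under stated assumptions: the list of high-risk actions, the `Request` fields, and the one-approver default are illustrative, and how out-of-band confirmation is actually obtained (for example, a callback to a number on file) is outside the code.

```python
from dataclasses import dataclass

# Illustrative set of actions that should never run on a single request.
HIGH_RISK_ACTIONS = {"wire_transfer", "bank_detail_change", "credential_reset"}


@dataclass
class Request:
    action: str
    requester: str
    confirmed_out_of_band: bool = False  # verified via a second, independent channel
    approvers: tuple = ()                # people who signed off on the request


def may_execute(req: Request, required_approvers: int = 1) -> bool:
    """Allow high-risk actions only with out-of-band confirmation and independent approval."""
    if req.action not in HIGH_RISK_ACTIONS:
        return True
    # The requester cannot approve their own request.
    independent = {a for a in req.approvers if a != req.requester}
    return req.confirmed_out_of_band and len(independent) >= required_approvers


print(may_execute(Request("wire_transfer", "cfo")))
print(may_execute(Request("wire_transfer", "cfo", True, ("controller",))))
```

The gate does not try to decide whether a video or voice is fake. It simply ensures that a convincing impersonation alone is never enough to move money or change credentials.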

E. Metrics & KPIs Aligned With the Threat

- Detection & response time for deepfake-enabled events

- Cross-channel escalation rates

- False-positive vs. true-positive detection rates

- Vendor-originated deepfake alerts

- Reduction in reliance on manual identification
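
Several of these metrics can be computed from a simple record of investigated alerts. The sketch below covers a subset (precision, mean time to detect, vendor share, automated share); the `InvestigatedAlert` fields are assumptions about what your case-management data might contain.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class InvestigatedAlert:
    occurred_at: datetime
    detected_at: datetime
    confirmed_deepfake: bool      # True if investigation confirmed a deepfake
    vendor_originated: bool
    detected_automatically: bool  # False if a person spotted it manually


def deepfake_kpis(alerts):
    """Summarize detection quality; returns None when there is nothing confirmed to measure."""
    confirmed = [a for a in alerts if a.confirmed_deepfake]
    if not confirmed:
        return None
    delays = [(a.detected_at - a.occurred_at).total_seconds() / 60 for a in confirmed]
    return {
        "precision": len(confirmed) / len(alerts),
        "mean_minutes_to_detect": sum(delays) / len(delays),
        "vendor_share": sum(a.vendor_originated for a in confirmed) / len(confirmed),
        "automated_share": sum(a.detected_automatically for a in confirmed) / len(confirmed),
    }


t0 = datetime(2025, 1, 1, 9, 0)
history = [
    InvestigatedAlert(t0, t0 + timedelta(minutes=30), True, True, True),
    InvestigatedAlert(t0, t0 + timedelta(minutes=90), True, False, True),
    InvestigatedAlert(t0, t0 + timedelta(minutes=10), False, False, True),
    InvestigatedAlert(t0, t0 + timedelta(minutes=20), False, True, False),
]
print(deepfake_kpis(history))
```

Tracking `automated_share` over time is one way to measure the reduction in reliance on manual identification.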

4. Action Steps for CISOs Today

If your org hasn’t yet taken deepfake risk seriously at the workspace level, here’s a short action playbook:

- Run internal simulations

Test video and audio deepfake scenarios, and see whether your teams detect or misinterpret them.

- Audit existing channel visibility

Ensure you have logs and telemetry from video, chat, file, email, and CRM. Without visibility, detection is blind.

- Adopt detection & correlation tools

Look for solutions that integrate cross-channel analysis and automated threat story building (not just isolated alerts).

- Fortify vendor interactions

Validate every vendor request (especially changes) via dual channels. Monitor vendor behavior anomalies closely.

- Update training programs

Include synthetic media awareness, scenario-based labs, and escalation protocols for suspicious requests.

- Define new KPIs

Align your security metrics to multidimensional detection and automated investigations – not just “number of blocked emails.”

Conclusion

The data confirms a tipping point: synthetic media fraud is now a pervasive threat, and many organizations are caught off guard. Deepfake attacks no longer target obscure vectors – they attack the very tools where business is done.

For CISOs, the answer lies in evolving security from perimeters to workspaces. Detecting across video, voice, chat, files, and vendor touchpoints isn’t optional – it’s essential.

Cyvore’s vision aligns directly with that shift. Any security strategy that has yet to integrate multi-modal deepfake detection and cross-channel correlation is at risk of being outpaced.

If you’re rethinking your defense architecture for 2026, the first question shouldn’t be “Do we protect email?” – but “Does our security protect how we communicate and work today?”